Battleship - Gaming with AI, locally in my Browser

Exploring local-first architecture for agentic apps with LivestoreJS

Tech Stack

Gaming with AI, locally in my Browser

Everyone is asking AI all sorts of things every day. Most AI features, however, will not work when you’re offline, nor will they give you any good answers before you send all your private data to someone’s random hosted server.

You might have heard of geeks running their own AI locally, with Ollama or LM Studio on a GPU cluster or a Mac Studio with an M3 Ultra (probably from me). It has to be simpler. To explore that, I built a game where I can play against an AI that runs locally in my browser, or on my turn-key BYOC (bring your own cloud) serverless environment that also synchronizes across my devices.

If the Chrome T-Rex dinosaur rings a bell: yes, this game is something you can play completely offline when you’re on a plane. But you have an opponent, and a smart one.

Be local-first. That does not mean dismissing the power of the mighty cloud, but making it optional, so that applications stay ultra fast and data stays private in the user’s environment, just like Obsidian, the editor I am using to write this blog post. It’s about data ownership and user empowerment, as Adam Wiggins put it.

The Game and the Tech Stack: Battleship, on LiveStoreJS

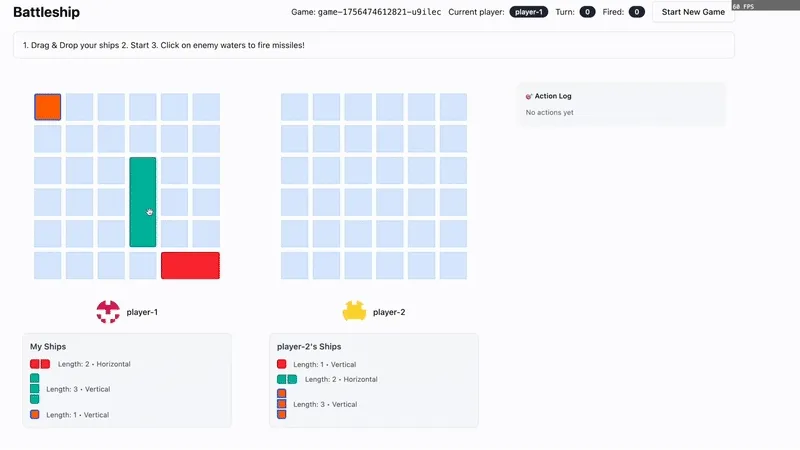

I picked Battleship, the classic naval strategy game, because it has simple rules and is something I played during my childhood. Players take turns firing missiles to hit the opponent’s hidden ships on a grid.

Check out the demo on Cloudflare Worker

The game is built on LiveStoreJS, a local-first state management framework with event sourcing and an embedded SQLite running in a web worker. In fact, I was intrigued to build the game when I read “LiveStoreJS is a great fit for turn-based games” and agents.

The architecture of LiveStoreJS resonates strongly with lessons I’ve learned the hard way:

- My stack is often CQRS-based. From analytics platforms to EventBridge or Kafka, I have preferred unified `SomethingHappened`-style domain events that I can easily reason about anywhere.

- Event sourcing is often the “right” thing to do, but also a hard thing to do. Caching, aggregation and conflict resolution are best offloaded to a dedicated sync engine.

- I love the reactive, sleek mental model of MobX and RxJS in the UI, but it comes with quite a few gotchas and ergonomic limitations that hurt productivity.

- Applications can often be modelled as a state machine with event transitions. Turn-based games are the obvious ones. I once built a PvP card game with XState, and its declarative nature made it easier to design, track winning criteria, set up guardrails and avoid race conditions.

- Maintaining servers is often a turn-off for side projects. Firing up some PaaS with a credit card sounds good only on the weekend you kick off the project, not a few months later when you’re too busy.

With LiveStoreJS, everything is modelled as events, which are materialized into local state accessible through React hooks. That translates into an ultra-fast UI and great DX. Deploying the sync server is fairly simple with options like Cloudflare, which is highly performant and cost-efficient, enabling multi-device sync and data snapshots out of the box.

With the Battleship game as an example:

- Player actions are modelled as events like `MISSILE_FIRED`, and the sync server enforces that only the player whose turn it is can commit a single action and advance the turn.

- Coordinates of the grid and ship positions are stored in game state (as arrays); hits and misses are all derived from the positions of missiles.

- Also in the derived state, whenever all of a player’s battleships are sunk, we have a winner.

`{ "type": "MISSILE_FIRED", "x": 1, "y": 1, "by": "player_1" }`

![[Pasted image 20251203233059.png]]
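The derived-state idea above can be sketched in a few lines of plain JavaScript. This is only an illustration under assumed shapes: the `fleets` layout (a list of ships, each a list of cells), the player ids and the function names are hypothetical, and none of this is the LiveStoreJS API.

```javascript
// Hypothetical shapes for illustration only; this is not the LiveStoreJS API.
// fleets: { player_1: [[{x, y}, ...], ...], player_2: [...] }, one inner array per ship.

/** Fold the ordered event log into the list of missiles each player has fired. */
function materialize(events) {
  const missiles = { player_1: [], player_2: [] };
  for (const e of events) {
    if (e.type === 'MISSILE_FIRED') missiles[e.by].push({ x: e.x, y: e.y });
  }
  return missiles;
}

/** A cell is hit when any missile fired at this fleet landed on it. */
function isHit(cell, missilesAtFleet) {
  return missilesAtFleet.some((m) => m.x === cell.x && m.y === cell.y);
}

/** A fleet is sunk when every cell of every ship has been hit. */
function isFleetSunk(ships, missilesAtFleet) {
  return ships.every((ship) => ship.every((cell) => isHit(cell, missilesAtFleet)));
}

/** Derive the winner (or null while the game is still running) from the log. */
function winner(fleets, events) {
  const missiles = materialize(events);
  if (isFleetSunk(fleets.player_2, missiles.player_1)) return 'player_1';
  if (isFleetSunk(fleets.player_1, missiles.player_2)) return 'player_2';
  return null;
}
```

Nothing here stores a "hit" flag or a "winner" field: both are recomputed from ship positions and the event log, which is what keeps the events themselves so small.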

AI, AI everywhere

Of course, we need to talk about the AI opponent, which subscribes to events and makes a move when it is its turn. We could definitely use a heuristic strategy based on `Math.random()` for Battleship; using an LLM is purely to demonstrate potential architectures for working with AI. Basically, each turn we prompt the AI with the game state and ask for the coordinates to hit, as structured output (JSON).
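A minimal sketch of how the two strategies could fit together: accept the model’s JSON reply when it is a legal move, otherwise fall back to the `Math.random()` heuristic. The function names, the zero-based coordinates and the `fired` list of already-targeted cells are assumptions made for this example, not the game’s actual code.

```javascript
/** List every cell on a size x size board that has not been fired at yet. */
function untriedCells(fired, size) {
  const tried = new Set(fired.map((m) => `${m.x},${m.y}`));
  const cells = [];
  for (let x = 0; x < size; x++) {
    for (let y = 0; y < size; y++) {
      if (!tried.has(`${x},${y}`)) cells.push({ x, y });
    }
  }
  return cells;
}

/** Heuristic fallback: a uniformly random untried cell, or null if none is left. */
function randomMove(fired, size, rng = Math.random) {
  const cells = untriedCells(fired, size);
  if (cells.length === 0) return null;
  return cells[Math.floor(rng() * cells.length)];
}

/** Parse the model's JSON reply; null unless it is an in-range, untried cell. */
function parseMove(raw, fired, size) {
  let move;
  try {
    move = JSON.parse(raw);
  } catch {
    return null; // not JSON at all
  }
  if (move === null || typeof move !== 'object') return null;
  const { x, y } = move;
  const inRange = (n) => Number.isInteger(n) && n >= 0 && n < size;
  if (!inRange(x) || !inRange(y)) return null;
  const repeated = fired.some((m) => m.x === x && m.y === y);
  return repeated ? null : { x, y };
}

/** Prefer the model's move; fall back to the random heuristic when it is unusable. */
function chooseMove(raw, fired, size, rng = Math.random) {
  return parseMove(raw, fired, size) ?? randomMove(fired, size, rng);
}
```

Validating the reply before committing it as an event means a confused model can never stall the turn or fire at the same cell twice.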

AI on the edge

Before exploring the local option, we also want to address the convenient option of cloud-based, state-of-the-art AI models. With a Cloudflare Worker already acting as our hosting server and sync engine, leveraging AI on the edge is a logical choice that avoids spinning up another server. Our game rides on the OpenAI-compatible API, with easy access to providers like OpenRouter, Anthropic and DeepSeek. Beyond that, there are perks like dynamic routing with a visual builder, which is highly effective for delegating different prompts to different models as the application needs.

https://developers.cloudflare.com/ai-gateway/usage/chat-completion/
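For illustration, here is roughly what building such a request could look like. It follows the OpenAI chat-completions request format; `baseUrl`, `apiKey`, `model` and the prompt wording are placeholders I made up, and whether a given provider honours `response_format` with a JSON schema depends on the provider and model, so treat this as a sketch rather than the game’s code.

```javascript
/** JSON Schema for the move we want back; strict mode expects every property to be required. */
const moveSchema = {
  type: 'object',
  properties: {
    x: { type: 'number' },
    y: { type: 'number' },
    reasoning: { type: 'string' },
  },
  required: ['x', 'y', 'reasoning'],
  additionalProperties: false,
};

/** Build the URL and fetch options for one "pick a cell" request (no network call here). */
function buildMoveRequest({ baseUrl, apiKey, model, fired, gridSize }) {
  const body = {
    model,
    messages: [
      { role: 'system', content: 'You are playing Battleship. Reply with JSON only.' },
      {
        role: 'user',
        content:
          `The grid is ${gridSize}x${gridSize} with zero-based coordinates. ` +
          `Cells already fired at: ${JSON.stringify(fired)}. ` +
          'Choose the next cell to fire at.',
      },
    ],
    response_format: {
      type: 'json_schema',
      json_schema: { name: 'move', strict: true, schema: moveSchema },
    },
  };
  return {
    url: `${baseUrl}/chat/completions`,
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json', Authorization: `Bearer ${apiKey}` },
      body: JSON.stringify(body),
    },
  };
}

// Usage (requires network access and real credentials):
//   const { url, init } = buildMoveRequest({ baseUrl, apiKey, model, fired, gridSize: 10 });
//   const reply = await (await fetch(url, init)).json();
//   const move = JSON.parse(reply.choices[0].message.content);
```

Keeping request construction separate from `fetch` makes it easy to swap the base URL between providers or a gateway without touching the prompt logic.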

AI on the browser

Chrome’s Prompt API is an experimental JavaScript API (`window.ai.languageModel`) that allows web pages to access a built-in LLM (currently Gemini Nano) directly in the browser. It’s local and offline, privacy-first and free, and is currently a draft proposal to the W3C Community Group.

The first time the Chrome AI option is selected, the browser has to download the model. To get structured JSON output, we pass a schema to the `responseConstraint` parameter.

    // LM is assumed to reference the Prompt API's language-model entry point.
    const session = await LM.create({
      outputLanguage: 'en',
      language: 'en',
      initialPrompts: [{ role: 'system', content: 'Please respond in English.' }],
    });

    // JSON Schema describing the move we expect back from the model.
    const schema = {
      type: 'object',
      properties: {
        x: { type: 'number' },
        y: { type: 'number' },
        reasoning: { type: 'string' }
      },
      required: ['x', 'y'],
      additionalProperties: false
    };

    // Placeholder prompt; in the game, the prompt carries the current game state.
    const response = await session.prompt('What is the capital of France?', {
      outputLanguage: 'en',
      responseConstraint: schema,
    });

Uncharted Ground

Much of the early discussion surrounding local-first has focused on offline capability and on performance that overcomes the 138ms+ latency limit imposed by internet cables, as we have seen in Figma or Linear. There is more to it than that: new architecture options for agentic systems that make me stop and ponder.

For a turn-based game, global order is obviously necessary. In a popular variant of Battleship, players continue firing until they miss. If we could check locally against the coordinates of the ships, it would be possible to further improve latency and synchronize multiple turns at once. For more serious applications, that means working on encrypted values with homomorphic encryption.
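To make the “fire until you miss” variant concrete, here is a small sketch that resolves a queued batch of shots locally and stops at the first miss, so the whole batch could be synced at once. It assumes the opponent’s occupied cells are readable in plaintext, which a real game could not allow (hence the point about encryption); `resolveBatch` and its argument shapes are hypothetical.

```javascript
/**
 * Resolve queued shots against the opponent's occupied cells.
 * Shots are applied in order; the first miss ends the turn and later shots are dropped.
 */
function resolveBatch(shots, enemyCells) {
  const occupied = new Set(enemyCells.map((c) => `${c.x},${c.y}`));
  const applied = [];
  for (const shot of shots) {
    const hit = occupied.has(`${shot.x},${shot.y}`);
    applied.push({ ...shot, hit });
    if (!hit) break; // a miss hands the turn to the opponent
  }
  const turnEnds = applied.length > 0 && !applied[applied.length - 1].hit;
  return { applied, turnEnds };
}
```

The `applied` list is what would be committed as events in one round trip, and `turnEnds` tells the client whether the shooter keeps the turn.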

In the broader sense of local-first, centralization is optional. In this 1v1 game it is simpler to have a single party decide the order of actions and enforce the game rules. However, today it is also possible to reproduce a Google Docs-like experience with decentralized clients and local data, running CRDTs over P2P network protocols. Users aren’t just humans behind screens anymore; they are also agents running in browsers and on the edge, which need to collaborate asynchronously without a central authority.

Work done by agents is only as good as the context they consume. Securing data and managing decentralized permissions for agents is a complicated but urgent problem requiring new infrastructure. It’s refreshing to see sync engines like Jazz addressing this. By using cryptographic signatures, the chain of edits is verifiable like a blockchain, making it possible to check it against the whitelist of who can read or write the values.

AI has transformed the way software is built. On one hand, it’s about how to generate the best code; on the other, it is about how we want to architect our apps to work with agents as first-class citizens, without sacrificing privacy, data sovereignty and user agency.

I had a stint hacking on crypto technologies; ironically, I find them better leveraged by the local-first communities rather than by the hype-driven crypto crowd. Sandboxed, verifiable computation on encrypted data is more essential than ever in the AI era.

It’s a simple little game, but my hunch is what I build in the future will look a lot like this.